Introduction

Ptmalloc is the memory allocator used in libc. I am planning to give a detailed introduction on ptmalloc in two parts. This post is the first. In this post, I will introduce the common data structures used in ptmalloc and present the work flow of allocation procedure in ptmalloc. In the second part, I will present the work flow of deallocation and reallocation procedure in ptmalloc. Furthermore, I will also list the security checks used in ptmalloc. I use the source code of libc-2.25 for demonstration.

Ptmalloc Chunk

The basic unit of memory management in ptmalloc is malloc_chunk. It is consisted of 6 metadata field. As explained below, the size of each metadata is 4 bytes long on x86 platform and 8 bytes long on x64 platform. In the following of this post, we will only discuss the situation under x86 platform.

#ifndef INTERNAL_SIZE_T

# define INTERNAL_SIZE_T size_t

#endif

#define SIZE_SZ (sizeof (INTERNAL_SIZE_T))

struct malloc_chunk;

typedef struct malloc_chunk* mchunkptr;

struct malloc_chunk {

INTERNAL_SIZE_T mchunk_prev_size; /* Size of previous chunk (if free). */

INTERNAL_SIZE_T mchunk_size; /* Size in bytes, including overhead. */

struct malloc_chunk* fd; /* double links -- used only if free. */

struct malloc_chunk* bk;

/* Only used for large blocks: pointer to next larger size. */

struct malloc_chunk* fd_nextsize; /* double links -- used only if free. */

struct malloc_chunk* bk_nextsize;

};

To avoid potential confusion, we need to emphasise the concept of chunk first. In ptmalloc, a chunk refers to a memory area allocated via memory management allocator and is used to store the metadata and application data.

In ptmalloc, there are three basic types of chunks in ptmalloc: allocated chunk, freed chunk and top chunk. Before introducing these three chunks, we take a look at the chunk operation first. From those operationd, we can observe that the last three bits in mchunk_size to denote the status of chunk:

A: set if the chunk was obtained from a non-main arena.

M: set if the chunk was obtained with mmap().

P: set if previous adjacent chunk is in use.

Usually, in CTF challenge we only need to focus on P bit (0x1) for heap exploitation. Here P is 0x1 if the adjacent chunk is in use and 0x0 if the adjacent chunk is freed. Here previous adjacent chunk represents the chunk which is just located before current chunk in memory. This concept is different from the forward chunk, which will be fully discussed later, in chunk management.

/* conversion from malloc headers to user pointers, and back */ #define chunk2mem(p) ((void*)((char*)(p) + 2*SIZE_SZ)) #define mem2chunk(mem) ((mchunkptr)((char*)(mem) - 2*SIZE_SZ)) /* size field is or'ed with PREV_INUSE when previous adjacent chunk in use */ #define PREV_INUSE 0x1 /* extract inuse bit of previous chunk */ #define prev_inuse(p) ((p)->mchunk_size & PREV_INUSE) /* size field is or'ed with IS_MMAPPED if the chunk was obtained with mmap() */ #define IS_MMAPPED 0x2 /* check for mmap()'ed chunk */ #define chunk_is_mmapped(p) ((p)->mchunk_size & IS_MMAPPED) /* size field is or'ed with NON_MAIN_ARENA if the chunk was obtained from a non-main arena. This is only set immediately before handing the chunk to the user, if necessary. */ #define NON_MAIN_ARENA 0x4 /* Check for chunk from main arena. */ #define chunk_main_arena(p) (((p)->mchunk_size & NON_MAIN_ARENA) == 0) /* Mark a chunk as not being on the main arena. */ #define set_non_main_arena(p) ((p)->mchunk_size |= NON_MAIN_ARENA) #define SIZE_BITS (PREV_INUSE | IS_MMAPPED | NON_MAIN_ARENA) /* Get size, ignoring use bits */ #define chunksize(p) (chunksize_nomask (p) & ~(SIZE_BITS)) /* Like chunksize, but do not mask SIZE_BITS. */ #define chunksize_nomask(p) ((p)->mchunk_size) /* Ptr to next physical malloc_chunk. */ #define next_chunk(p) ((mchunkptr) (((char *) (p)) + chunksize (p))) /* Size of the chunk below P. Only valid if prev_inuse (P). */ #define prev_size(p) ((p)->mchunk_prev_size) /* Set the size of the chunk below P. Only valid if prev_inuse (P). */ #define set_prev_size(p, sz) ((p)->mchunk_prev_size = (sz)) /* Ptr to previous physical malloc_chunk. Only valid if prev_inuse (P). */ #define prev_chunk(p) ((mchunkptr) (((char *) (p)) - prev_size (p))) /* Treat space at ptr + offset as a chunk */ #define chunk_at_offset(p, s) ((mchunkptr) (((char *) (p)) + (s))) /* extract p's inuse bit */ #define inuse(p) \ ((((mchunkptr) (((char *) (p)) + chunksize (p)))->mchunk_size) & PREV_INUSE) /* set/clear chunk as being inuse without otherwise disturbing */ #define set_inuse(p) \ ((mchunkptr) (((char *) (p)) + chunksize (p)))->mchunk_size |= PREV_INUSE #define clear_inuse(p) \ ((mchunkptr) (((char *) (p)) + chunksize (p)))->mchunk_size &= ~(PREV_INUSE) /* check/set/clear inuse bits in known places */ #define inuse_bit_at_offset(p, s) \ (((mchunkptr) (((char *) (p)) + (s)))->mchunk_size & PREV_INUSE) #define set_inuse_bit_at_offset(p, s) \ (((mchunkptr) (((char *) (p)) + (s)))->mchunk_size |= PREV_INUSE) #define clear_inuse_bit_at_offset(p, s) \ (((mchunkptr) (((char *) (p)) + (s)))->mchunk_size &= ~(PREV_INUSE)) /* Set size at head, without disturbing its use bit */ #define set_head_size(p, s) ((p)->mchunk_size = (((p)->mchunk_size & SIZE_BITS) | (s))) /* Set size/use field */ #define set_head(p, s) ((p)->mchunk_size = (s)) /* Set size at footer (only when chunk is not in use) */ #define set_foot(p, s) (((mchunkptr) ((char *) (p) + (s)))->mchunk_prev_size = (s))

Allocated Chunk

For an allocated chunk, the size of previous chunk will be set if previous adjacent chunk is freed and P is unset. In the next adjacent chunk, P will be set. One thing to note is that is the current chunk is an allocated chunk, mchunk_prev_size will be used to store application data. Such feature is always combined with off-by-one error in CTF challenge.

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | Size of previous chunk |<= chunk +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | Size of chunk, in bytes |A|M|P| +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | User data starts here... .<= mem . . . (malloc_usable_size() bytes) . . | +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | (size of chunk, but used for application data) |<= next chunk +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | Size of next chunk, in bytes |A|0|1| +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

Freed Chunk

For an freed chunk, the size of previous chunk will be set if previous adjacent chunk is freed and P is unset. In the next adjacent chunk, P will be unset and mchunk_prev_size will be set to the size of current chunk. Forward pointer and back pointer will be set according to the deallocation strategy, which is decided by the size of current chunk and discussed next.

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | Size of previous chunk |<= chunk +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | Size of chunk, in bytes |A|0|P| +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | Forward pointer to next chunk in list | +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | Back pointer to previous chunk in list | +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | Unused space (may be 0 bytes long) . . . . | +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | Size of chunk, in bytes |<= next chunk +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | Size of next chunk, in bytes |A|0|0| +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

Top Chunk

In top chunk, the size of chunk represents the remaining size of main arena at present. If the new size if larger than the current size, brk() or mmap() will be called to enlarge the top chunk.

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | Size of previous chunk |<= top chunk +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ | Size of chunk, in bytes |A|0|P| +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+ + + | | + + . . . | +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

Chunk Management

After understanding the malloc chunks in ptmalloc, we then come to how the chunks are managed via memory allocator. In ptmalloc, there are four types of bins to store different types of freed chunk: Fastbin, Unsorted bin, Small bins, Large bins. Structure malloc_state is used to store the top chunk pointer, last remainder, fast bins and bins as shown below.

struct malloc_state

{

/* Serialize access. */

__libc_lock_define (, mutex);

/* Flags (formerly in max_fast). */

int flags;

/* Fastbins */

mfastbinptr fastbinsY[NFASTBINS];

/* Base of the topmost chunk -- not otherwise kept in a bin */

mchunkptr top;

/* The remainder from the most recent split of a small request */

mchunkptr last_remainder;

/* Normal bins packed as described above */

mchunkptr bins[NBINS * 2 - 2];

/* Bitmap of bins */

unsigned int binmap[BINMAPSIZE];

/* Linked list */

struct malloc_state *next;

/* Linked list for free arenas. Access to this field is serialized

by free_list_lock in arena.c. */

struct malloc_state *next_free;

/* Number of threads attached to this arena. 0 if the arena is on

the free list. Access to this field is serialized by

free_list_lock in arena.c. */

INTERNAL_SIZE_T attached_threads;

/* Memory allocated from the system in this arena. */

INTERNAL_SIZE_T system_mem;

INTERNAL_SIZE_T max_system_mem;

};

To give a direct view on malloc_state, we list a malloc_state in memory below. 0x804b0a8 is the top chunk pointer, fastbinsY is located at 0xf7fac788 and its length is 10. Unsorted bin, small bin and large bin are located together in bins, which starts at 0xf7fac7b0.

0xf7fac780: 0x00000000 0x00000001 0x00000000 0x00000000 0xf7fac790: 0x00000000 0x00000000 0x00000000 0x00000000 0xf7fac7a0: 0x00000000 0x00000000 0x00000000 0x00000000 0xf7fac7b0: 0x0804b0a8 0x00000000 0xf7fac7b0 0xf7fac7b0 0xf7fac7c0: 0xf7fac7b8 0xf7fac7b8 0xf7fac7c0 0xf7fac7c0 0xf7fac7d0: 0xf7fac7c8 0xf7fac7c8 0xf7fac7d0 0xf7fac7d0 0xf7fac7e0: 0xf7fac7d8 0xf7fac7d8 0xf7fac7e0 0xf7fac7e0 0xf7fac7f0: 0xf7fac7e8 0xf7fac7e8 0xf7fac7f0 0xf7fac7f0 0xf7fac800: 0xf7fac7f8 0xf7fac7f8 0xf7fac800 0xf7fac800 0xf7fac810: 0xf7fac808 0xf7fac808 0xf7fac810 0xf7fac810

Ptmalloc Allocation

This chapter is divided into two parts. The first part will discuss the allocation strategy in ptmalloc based on the source code of libc. This part may look boring and tedious. The second part will give a summary of the details discussed in the first part as [1]. Uninterested reader can jump to the second part to read the conclusion.

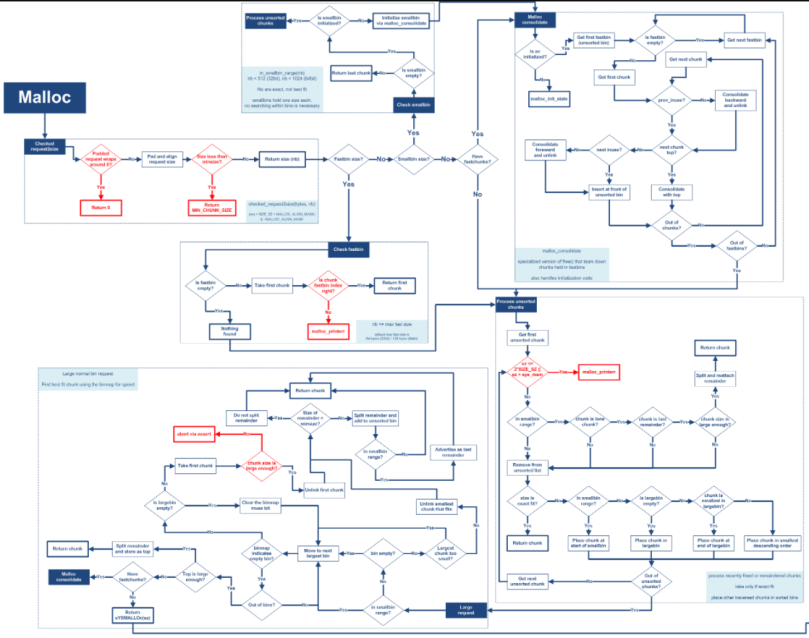

Malloc internal

Based on the source code in malloc.c and the work flow of malloc given below, I will present the corresponding code for each part at source code level.

Function malloc_init_state

#define NBINS 128

#define NSMALLBINS 64

#define SMALLBIN_WIDTH MALLOC_ALIGNMENT

#define SMALLBIN_CORRECTION (MALLOC_ALIGNMENT > 2 * SIZE_SZ)

#define MIN_LARGE_SIZE ((NSMALLBINS - SMALLBIN_CORRECTION) * SMALLBIN_WIDTH)

#define in_smallbin_range(sz) \

((unsigned long) (sz) < (unsigned long) MIN_LARGE_SIZE)

#ifndef DEFAULT_MXFAST

#define DEFAULT_MXFAST (64 * SIZE_SZ / 4)

#endif

#define set_max_fast(s) \

global_max_fast = (((s) == 0) \

? SMALLBIN_WIDTH : ((s + SIZE_SZ) & ~MALLOC_ALIGN_MASK))

#define get_max_fast() global_max_fast

malloc_init_state (mstate av)

{

int i;

mbinptr bin;

/* Establish circular links for normal bins */

for (i = 1; i fd = bin->bk = bin;

}

set_noncontiguous (av);

if (av == &main_arena)

set_max_fast (DEFAULT_MXFAST);

av->flags |= FASTCHUNKS_BIT;

av->top = initial_top (av);

}

At first, the malloc_init_state is invoked to initialise the malloc_state. In this process, the fd and bk pointer of each element in bins are set to the address of it. And global_max_fast is set to be 0x40.

Function malloc_consolidate

malloc_consolidate will try to merge the freed chunks in fastbins and move them into the unsorted bin. After retrieving a freed chunk from fastbin, the merging sequence is as following:

Check if adjacent previous chunk is use: If yes, merge the current chunk into the previous adjacent chunk.

Check if adjacent next chunk is top chunk: If yes, set current chunk as top chunk and modify chunk_size. If no, go to next step.

Check if adjacent next chunk is in use: If yes, just move the current chunk into unsorted bin and unset the P bit of next chunk. If no, merge the next chunk into the current chunk, move the current chunk into unsorted bin and unset the P bit of current next adjacent chunk.

/*

------------------------- malloc_consolidate -------------------------

malloc_consolidate is a specialized version of free() that tears

down chunks held in fastbins. Free itself cannot be used for this

purpose since, among other things, it might place chunks back onto

fastbins. So, instead, we need to use a minor variant of the same

code.

Also, because this routine needs to be called the first time through

malloc anyway, it turns out to be the perfect place to trigger

initialization code.

*/

static void malloc_consolidate(mstate av)

{

mfastbinptr* fb; /* current fastbin being consolidated */

mfastbinptr* maxfb; /* last fastbin (for loop control) */

mchunkptr p; /* current chunk being consolidated */

mchunkptr nextp; /* next chunk to consolidate */

mchunkptr unsorted_bin; /* bin header */

mchunkptr first_unsorted; /* chunk to link to */

/* These have same use as in free() */

mchunkptr nextchunk;

INTERNAL_SIZE_T size;

INTERNAL_SIZE_T nextsize;

INTERNAL_SIZE_T prevsize;

int nextinuse;

mchunkptr bck;

mchunkptr fwd;

/*

If max_fast is 0, we know that av hasn't

yet been initialized, in which case do so below

*/

if (get_max_fast () != 0) {

clear_fastchunks(av);

unsorted_bin = unsorted_chunks(av);

/*

Remove each chunk from fast bin and consolidate it, placing it

then in unsorted bin. Among other reasons for doing this,

placing in unsorted bin avoids needing to calculate actual bins

until malloc is sure that chunks aren't immediately going to be

reused anyway.

*/

maxfb = &fastbin (av, NFASTBINS - 1);

fb = &fastbin (av, 0);

do {

p = atomic_exchange_acq (fb, NULL);

if (p != 0) {

do {

check_inuse_chunk(av, p);

nextp = p->fd;

/* Slightly streamlined version of consolidation code in free() */

size = chunksize (p);

nextchunk = chunk_at_offset(p, size);

nextsize = chunksize(nextchunk);

if (!prev_inuse(p)) {

prevsize = prev_size (p);

size += prevsize;

p = chunk_at_offset(p, -((long) prevsize));

unlink(av, p, bck, fwd);

}

if (nextchunk != av->top) {

nextinuse = inuse_bit_at_offset(nextchunk, nextsize);

if (!nextinuse) {

size += nextsize;

unlink(av, nextchunk, bck, fwd);

} else

clear_inuse_bit_at_offset(nextchunk, 0);

first_unsorted = unsorted_bin->fd;

unsorted_bin->fd = p;

first_unsorted->bk = p;

if (!in_smallbin_range (size)) {

p->fd_nextsize = NULL;

p->bk_nextsize = NULL;

}

set_head(p, size | PREV_INUSE);

p->bk = unsorted_bin;

p->fd = first_unsorted;

set_foot(p, size);

}

else {

size += nextsize;

set_head(p, size | PREV_INUSE);

av->top = p;

}

} while ( (p = nextp) != 0);

}

} while (fb++ != maxfb);

}

else {

malloc_init_state(av);

check_malloc_state(av);

}

}

Function __int_malloc

__libc_malloc is the function that returns the requested chunk for application from bins or main_arena. Now let’s discuss __int_malloc, the internal implementation of malloc in libc.

checked_request2size (bytes, nb);

The allocator transforms the requested size into the actual allocated chunk size first. Then the allocator tries to obtain the requested chunk in the following sequence: Fast bin, Unsorted bin, Small bin, Large bin and Top chunk. We will introduce those one by one.

Fast bin

/*

If the size qualifies as a fastbin, first check corresponding bin.

This code is safe to execute even if av is not yet initialized, so we

can try it without checking, which saves some time on this fast path.

*/

if ((unsigned long) (nb) fd, victim))!= victim);

if (victim != 0)

{

if (__builtin_expect (fastbin_index (chunksize (victim)) != idx, 0))

{

errstr = "malloc(): memory corruption (fast)";

errout:

malloc_printerr (check_action, errstr, chunk2mem (victim), av);

return NULL;

}

check_remalloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

}

If the size is less than or equal to global_max_fast, the allocator will try to search through the fastbin to find fit chunk. The index into fastbin is decided by chunk size.

Small bin

/*

If a small request, check regular bin. Since these "smallbins"

hold one size each, no searching within bins is necessary.

(For a large request, we need to wait until unsorted chunks are

processed to find best fit. But for small ones, fits are exact

anyway, so we can check now, which is faster.)

*/

if (in_smallbin_range (nb))

{

idx = smallbin_index (nb);

bin = bin_at (av, idx);

if ((victim = last (bin)) != bin)

{

if (victim == 0) /* initialization check */

malloc_consolidate (av);

else

{

bck = victim->bk;

if (__glibc_unlikely (bck->fd != victim))

{

errstr = "malloc(): smallbin double linked list corrupted";

goto errout;

}

set_inuse_bit_at_offset (victim, nb);

bin->bk = bck;

bck->fd = bin;

if (av != &main_arena)

set_non_main_arena (victim);

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

}

}

The index into small bin is also decided by chunk size. After identifying the corresponding small bin, the allocator will try to remove the first freed chunk in small bin.

Unsorted bin

while ((victim = unsorted_chunks (av)->bk) != unsorted_chunks (av))

{

bck = victim->bk;

if (__builtin_expect (chunksize_nomask (victim) av->system_mem, 0))

malloc_printerr (check_action, "malloc(): memory corruption",

chunk2mem (victim), av);

size = chunksize (victim);

/*

If a small request, try to use last remainder if it is the

only chunk in unsorted bin. This helps promote locality for

runs of consecutive small requests. This is the only

exception to best-fit, and applies only when there is

no exact fit for a small chunk.

*/

if (in_smallbin_range (nb) &&

bck == unsorted_chunks (av) &&

victim == av->last_remainder &&

(unsigned long) (size) > (unsigned long) (nb + MINSIZE))

{

/* split and reattach remainder */

remainder_size = size - nb;

remainder = chunk_at_offset (victim, nb);

unsorted_chunks (av)->bk = unsorted_chunks (av)->fd = remainder;

av->last_remainder = remainder;

remainder->bk = remainder->fd = unsorted_chunks (av);

if (!in_smallbin_range (remainder_size))

{

remainder->fd_nextsize = NULL;

remainder->bk_nextsize = NULL;

}

set_head (victim, nb | PREV_INUSE |

(av != &main_arena ? NON_MAIN_ARENA : 0));

set_head (remainder, remainder_size | PREV_INUSE);

set_foot (remainder, remainder_size);

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

/* remove from unsorted list */

unsorted_chunks (av)->bk = bck;

bck->fd = unsorted_chunks (av);

/* Take now instead of binning if exact fit */

if (size == nb)

{

set_inuse_bit_at_offset (victim, size);

if (av != &main_arena)

set_non_main_arena (victim);

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

/* place chunk in bin */

if (in_smallbin_range (size))

{

victim_index = smallbin_index (size);

bck = bin_at (av, victim_index);

fwd = bck->fd;

}

else

{

victim_index = largebin_index (size);

bck = bin_at (av, victim_index);

fwd = bck->fd;

/* maintain large bins in sorted order */

if (fwd != bck)

{

/* Or with inuse bit to speed comparisons */

size |= PREV_INUSE;

/* if smaller than smallest, bypass loop below */

assert (chunk_main_arena (bck->bk));

if ((unsigned long) (size)

bk))

{

fwd = bck;

bck = bck->bk;

victim->fd_nextsize = fwd->fd;

victim->bk_nextsize = fwd->fd->bk_nextsize;

fwd->fd->bk_nextsize = victim->bk_nextsize->fd_nextsize = victim;

}

else

{

assert (chunk_main_arena (fwd));

while ((unsigned long) size fd_nextsize;

assert (chunk_main_arena (fwd));

}

if ((unsigned long) size

== (unsigned long) chunksize_nomask (fwd))

/* Always insert in the second position. */

fwd = fwd->fd;

else

{

victim->fd_nextsize = fwd;

victim->bk_nextsize = fwd->bk_nextsize;

fwd->bk_nextsize = victim;

victim->bk_nextsize->fd_nextsize = victim;

}

bck = fwd->bk;

}

}

else

victim->fd_nextsize = victim->bk_nextsize = victim;

}

mark_bin (av, victim_index);

victim->bk = bck;

victim->fd = fwd;

fwd->bk = victim;

bck->fd = victim;

#define MAX_ITERS 10000

if (++iters >= MAX_ITERS)

break;

}

The allocator will iterate over the unsorted bin, if the first chunk satisfies the following conditions, this chunk will be splitted into the requested size chunk and the remaining chunk. The remaining chunk will be inserted into the unsorted bin again.

(1) The requested size is in small range.

(2) This chunk is the only chunk in unsorted bin.

(3) This chunk is also the last remainder chunk.

(4) The remaining size after split is large enough.

If the size of unsorted chunk is exact fit to the requested size, return the chunk. Otherwise, the iteration over the unsorted bin will continue to check the status of unsorted chunk.

(1) If the unsorted chunk is in small range, the unsorted chunk will be inserted into the corresponding small bin. Then repeat the process above on next unsorted chunk.

(2) Otherwise, if the unsorted chunk is in large range, and the corresponding large bin is empty (bck == fwd), the unsorted chunk will be inserted into the corresponding large bin directly. Then repeat the process above on next unsorted chunk.

(3) Otherwise, if the unsorted chunk is in large range, and the corresponding large bin is not empty. The unsorted chunk will be inserted into the corresponding large bin by descending size order.

After all the unsorted chunks in unsorted bin cannot provide fit chunk as return value, i.e. there is no unsorted chunk or small chunk to provide requested chunk. The allocator continues to the next step.

Large bin

/*

If a large request, scan through the chunks of current bin in

sorted order to find smallest that fits. Use the skip list for this.

*/

if (!in_smallbin_range (nb))

{

bin = bin_at (av, idx);

/* skip scan if empty or largest chunk is too small */

if ((victim = first (bin)) != bin

&& (unsigned long) chunksize_nomask (victim)>= (unsigned long) (nb))

{

victim = victim->bk_nextsize;

while (((unsigned long) (size = chunksize (victim)) bk_nextsize;

/* Avoid removing the first entry for a size so that the skip

list does not have to be rerouted. */

if (victim != last (bin)

&& chunksize_nomask (victim)== chunksize_nomask (victim->fd))

victim = victim->fd;

remainder_size = size - nb;

unlink (av, victim, bck, fwd);

/* Exhaust */

if (remainder_size fd;

if (__glibc_unlikely (fwd->bk != bck))

{

errstr = "malloc(): corrupted unsorted chunks";

goto errout;

}

remainder->bk = bck;

remainder->fd = fwd;

bck->fd = remainder;

fwd->bk = remainder;

if (!in_smallbin_range (remainder_size))

{

remainder->fd_nextsize = NULL;

remainder->bk_nextsize = NULL;

}

set_head (victim, nb | PREV_INUSE |

(av != &main_arena ? NON_MAIN_ARENA : 0));

set_head (remainder, remainder_size | PREV_INUSE);

set_foot (remainder, remainder_size);

}

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

}

If there is no large chunk in the large bin or the size of the first large chunk in large bin is smaller than the requested size, the allocator will jump to the next step. Otherwise, the allocator will try to find one chunk in current large bin.

The search for large chunk in large bin follows the “best fit” rule, i.e. the smallest chunk whose size is larger than the requested size. After having the discovered the large chunk, remove the chunk from the large bin and calculate the remaining size after splitting. If the remaining size is smaller than the MIN_SIZE, return the whole chunk as return value. Otherwise, split the current chunk and insert the remaining chunk into the unsorted bin.

Top chunk splitting

use_top:

/*

If large enough, split off the chunk bordering the end of memory

(held in av->top). Note that this is in accord with the best-fit

search rule. In effect, av->top is treated as larger (and thus

less well fitting) than any other available chunk since it can

be extended to be as large as necessary (up to system

limitations).

We require that av->top always exists (i.e., has size >=

MINSIZE) after initialization, so if it would otherwise be

exhausted by current request, it is replenished. (The main

reason for ensuring it exists is that we may need MINSIZE space

to put in fenceposts in sysmalloc.)

*/

victim = av->top;

size = chunksize (victim);

if ((unsigned long) (size) >= (unsigned long) (nb + MINSIZE))

{

remainder_size = size - nb;

remainder = chunk_at_offset (victim, nb);

av->top = remainder;

set_head (victim, nb | PREV_INUSE |

(av != &main_arena ? NON_MAIN_ARENA : 0));

set_head (remainder, remainder_size | PREV_INUSE);

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

If the top chunk is large enough and all processes above cannot return a fit chunk, the top chunk will be splitted into a requested chunk and the reset the top chunk to the remaining chunk.

Summary

First of all, let’s get the summary of common MACROs used in malloc

| X86 | X86-64 | |

|---|---|---|

| SIZE_SZ | 4 | 8 |

| MIN_CHUNK_SIZE | 16 | 32 |

| MALLOC_ALIGNMENT | 8 | 16 |

| MALLOC_ALIGN_MASK | 7 | 15 |

| NBINS | 128 | 128 |

| NFASTBINS | 10 | 10 |

| NSMALLBINS | 64 | 64 |

| SMALLBIN_WIDTH | 8 | 16 |

| DEFAULT_MXFAST | 64 | 128 |

| MAX_FAST_SIZE | 80 | 160 |

| MIN_LARGE_SIZE | 512 | 1024 |

After discussing the internal of ptmalloc above, we will give a summarised conclusion on how each type of bins in ptmalloc are organised and managed. We will also give some sample to demonstrate the memory layout of those bins.

Fast bin

1. Chunks in fast bin are maintained via a singly linked list.

2. Size of chunk in fast bin must be less than 0x40.

3. The P bit of next adjacent chunk of the current chunk in fast bin will not be unset.

3. When retrieving chunks from fast bin, the allocator follows the LIFO rule.

#include

#include

int main()

{

char *p1, *p2, *p3, *p4;

p1 = malloc(0x20);

p2 = malloc(0x20);

p3 = malloc(0x20);

p4 = malloc(0x20);

free(p1);

free(p2);

free(p3);

return 0;

}

/*

(gdb) x/20wx 0xf7fac780

0xf7fac780: 0x00000000 0x00000000 0x00000000 0x00000000

0xf7fac790: 0x00000000 0x0804b050 0x00000000 0x00000000

0xf7fac7a0: 0x00000000 0x00000000 0x00000000 0x00000000

0xf7fac7b0: 0x0804b0a0 0x00000000 0xf7fac7b0 0xf7fac7b0

0xf7fac7c0: 0xf7fac7b8 0xf7fac7b8 0xf7fac7c0 0xf7fac7c0

(gdb) x/12wx 0x0804b050

0x804b050: 0x00000000 0x00000029 0x0804b028 0x00000000

0x804b060: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b070: 0x00000000 0x00000000 0x00000000 0x00000029

(gdb) x/12wx 0x0804b028

0x804b028: 0x00000000 0x00000029 0x0804b000 0x00000000

0x804b038: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b048: 0x00000000 0x00000000 0x00000000 0x00000029

(gdb) x/12wx 0x0804b000

0x804b000: 0x00000000 0x00000029 0x00000000 0x00000000

0x804b010: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b020: 0x00000000 0x00000000 0x00000000 0x00000029

*/

When next malloc(0x20) is invoked, the allocator will return 0x804b058 (resulted from chunk2mem) for application.

Unsorted bin

1. Chunks in unsorted bin are maintained via a doubly linked list.

2. Size of chunk in unsorted bin must be larger than 0x40.

3. When allocating, the allocator will iterate over the unsorted chunks in the unsorted bin. After finding the fit chunk, remove the chunk from the unsorted chunk and process the chunk.

#include

#include

int main()

{

char *p1, *p2, *p3, *p4;

p1 = malloc(0xa0);

p2 = malloc(0x30);

p3 = malloc(0x100);

p4 = malloc(0x30);

free(p1);

free(p3);

return 0;

}

/*

(gdb) x/20wx 0xf7fac780

0xf7fac780: 0x00000000 0x00000001 0x00000000 0x00000000

0xf7fac790: 0x00000000 0x00000000 0x00000000 0x00000000

0xf7fac7a0: 0x00000000 0x00000000 0x00000000 0x00000000

0xf7fac7b0: 0x0804b220 0x00000000 0x0804b0e0 0x0804b000

0xf7fac7c0: 0xf7fac7b8 0xf7fac7b8 0xf7fac7c0 0xf7fac7c0

(gdb) x/20wx 0x0804b0e0

0x804b0e0: 0x00000000 0x00000109 0x0804b000 0xf7fac7b0

0x804b0f0: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b100: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b110: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b120: 0x00000000 0x00000000 0x00000000 0x00000000

(gdb) x/20wx 0x0804b000

0x804b000: 0x00000000 0x000000a9 0xf7fac7b0 0x0804b0e0

0x804b010: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b020: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b030: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b040: 0x00000000 0x00000000 0x00000000 0x00000000

*/

Small bin

1. Chunks in small bin are also maintained via a doubly linked list.

2. Size of chunk in small bin must be less than 0x200.

3. Different from unsorted chunk, the freed chunk will not be inserted into the small bin after deallocation. Only when a splitted chunk from unsorted bin will be inserted into the small bin (more details will be give in Part 2 of this series tutorial).

4. When retrieving chunks from small bin, the allocator follows the FIFO rule.

#include

#include

int main()

{

char *p1, *p2, *p3, *p4, *p5, *p6;

p1 = malloc(0xa0);

p2 = malloc(0x30);

p3 = malloc(0xa0);

p4 = malloc(0x30);

p5 = malloc(0xa0);

p6 = malloc(0x30);

free(p1);

free(p3);

free(p5);

malloc(0x50);

malloc(0x50);

malloc(0x50);

return 0;

}

/*

(gdb) x/40wx 0xf7fac780

0xf7fac780: 0x00000000 0x00000001 0x00000000 0x00000000

0xf7fac790: 0x00000000 0x00000000 0x00000000 0x00000000

0xf7fac7a0: 0x00000000 0x00000000 0x00000000 0x00000000

0xf7fac7b0: 0x0804b2a0 0x0804b218 0x0804b218 0x0804b218

0xf7fac7c0: 0xf7fac7b8 0xf7fac7b8 0xf7fac7c0 0xf7fac7c0

0xf7fac7d0: 0xf7fac7c8 0xf7fac7c8 0xf7fac7d0 0xf7fac7d0

0xf7fac7e0: 0xf7fac7d8 0xf7fac7d8 0xf7fac7e0 0xf7fac7e0

0xf7fac7f0: 0xf7fac7e8 0xf7fac7e8 0xf7fac7f0 0xf7fac7f0

0xf7fac800: 0x0804b138 0x0804b058 0xf7fac800 0xf7fac800

0xf7fac810: 0xf7fac808 0xf7fac808 0xf7fac810 0xf7fac810

(gdb) x/20wx 0x0804b138

0x804b138: 0x00000000 0x00000051 0x0804b058 0xf7fac7f8

0x804b148: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b158: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b168: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b178: 0x00000000 0x00000000 0x00000000 0x00000000

(gdb) x/20wx 0x0804b058

0x804b058: 0x00000000 0x00000051 0xf7fac7f8 0x0804b138

0x804b068: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b078: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b088: 0x00000000 0x00000000 0x00000000 0x00000000

0x804b098: 0x00000000 0x00000000 0x00000000 0x00000000

*/

Large bin

1. Chunks in large bin are also maintained via a doubly linked list.

2. Size of chunk in large bin must be larger than 0x200.

3. Except for fwd and bck pointer in large chunk, there are also fd_nextsize and bck_nextsize to denote the large chunk with different size (sorted by descending order).

4. Similar to small chunk, the freed large chunk will not be inserted into the small bin after deallocation. Only when a splitted chunk from unsorted bin will be inserted into the large bin.

5. When retrieving chunks from large bin, the allocator follows the “best fit” rule, i.e. the smallest chunk whose size is larger than the requested size.

#include

#include

int main()

{

char *p1, *p2, *p3, *p4, *p5, *p6, *p7, *p8;

p1 = malloc(0x1000);

p2 = malloc(0x30);

p3 = malloc(0x1000);

p4 = malloc(0x30);

p5 = malloc(0x1000);

p6 = malloc(0x30);

p7 = malloc(0x1000);

p8 = malloc(0x30);

free(p1);

free(p3);

free(p5);

free(p7);

malloc(0x810);

malloc(0x810);

malloc(0x840);

malloc(0x840);

return 0;

}

/*

(gdb) x/200wx 0xf7fac780

0xf7fac780: 0x00000000 0x00000001 0x00000000 0x00000000

0xf7fac790: 0x00000000 0x00000000 0x00000000 0x00000000

0xf7fac7a0: 0x00000000 0x00000000 0x00000000 0x00000000

0xf7fac7b0: 0x0804f100 0x00000000 0x0804b848 0x0804b848

0xf7fac7c0: 0xf7fac7b8 0xf7fac7b8 0xf7fac7c0 0xf7fac7c0

0xf7fac7d0: 0xf7fac7c8 0xf7fac7c8 0xf7fac7d0 0xf7fac7d0

0xf7fac7e0: 0xf7fac7d8 0xf7fac7d8 0xf7fac7e0 0xf7fac7e0

0xf7fac7f0: 0xf7fac7e8 0xf7fac7e8 0xf7fac7f0 0xf7fac7f0

0xf7fac800: 0xf7fac7f8 0xf7fac7f8 0xf7fac800 0xf7fac800

0xf7fac810: 0xf7fac808 0xf7fac808 0xf7fac810 0xf7fac810

0xf7fac820: 0xf7fac818 0xf7fac818 0xf7fac820 0xf7fac820

0xf7fac830: 0xf7fac828 0xf7fac828 0xf7fac830 0xf7fac830

0xf7fac840: 0xf7fac838 0xf7fac838 0xf7fac840 0xf7fac840

0xf7fac850: 0xf7fac848 0xf7fac848 0xf7fac850 0xf7fac850

0xf7fac860: 0xf7fac858 0xf7fac858 0xf7fac860 0xf7fac860

0xf7fac870: 0xf7fac868 0xf7fac868 0xf7fac870 0xf7fac870

0xf7fac880: 0xf7fac878 0xf7fac878 0xf7fac880 0xf7fac880

0xf7fac890: 0xf7fac888 0xf7fac888 0xf7fac890 0xf7fac890

0xf7fac8a0: 0xf7fac898 0xf7fac898 0xf7fac8a0 0xf7fac8a0

0xf7fac8b0: 0xf7fac8a8 0xf7fac8a8 0xf7fac8b0 0xf7fac8b0

0xf7fac8c0: 0xf7fac8b8 0xf7fac8b8 0xf7fac8c0 0xf7fac8c0

0xf7fac8d0: 0xf7fac8c8 0xf7fac8c8 0xf7fac8d0 0xf7fac8d0

0xf7fac8e0: 0xf7fac8d8 0xf7fac8d8 0xf7fac8e0 0xf7fac8e0

0xf7fac8f0: 0xf7fac8e8 0xf7fac8e8 0xf7fac8f0 0xf7fac8f0

0xf7fac900: 0xf7fac8f8 0xf7fac8f8 0xf7fac900 0xf7fac900

0xf7fac910: 0xf7fac908 0xf7fac908 0xf7fac910 0xf7fac910

0xf7fac920: 0xf7fac918 0xf7fac918 0xf7fac920 0xf7fac920

0xf7fac930: 0xf7fac928 0xf7fac928 0xf7fac930 0xf7fac930

0xf7fac940: 0xf7fac938 0xf7fac938 0xf7fac940 0xf7fac940

0xf7fac950: 0xf7fac948 0xf7fac948 0xf7fac950 0xf7fac950

0xf7fac960: 0xf7fac958 0xf7fac958 0xf7fac960 0xf7fac960

0xf7fac970: 0xf7fac968 0xf7fac968 0xf7fac970 0xf7fac970

0xf7fac980: 0xf7fac978 0xf7fac978 0xf7fac980 0xf7fac980

0xf7fac990: 0xf7fac988 0xf7fac988 0xf7fac990 0xf7fac990

0xf7fac9a0: 0xf7fac998 0xf7fac998 0xf7fac9a0 0xf7fac9a0

0xf7fac9b0: 0xf7fac9a8 0xf7fac9a8 0xf7fac9b0 0xf7fac9b0

0xf7fac9c0: 0xf7fac9b8 0xf7fac9b8 0xf7fac9c0 0xf7fac9c0

0xf7fac9d0: 0xf7fac9c8 0xf7fac9c8 0xf7fac9d0 0xf7fac9d0

0xf7fac9e0: 0xf7fac9d8 0xf7fac9d8 0xf7fac9e0 0xf7fac9e0

0xf7fac9f0: 0xf7fac9e8 0xf7fac9e8 0xf7fac9f0 0xf7fac9f0

0xf7faca00: 0xf7fac9f8 0xf7fac9f8 0xf7faca00 0xf7faca00

0xf7faca10: 0xf7faca08 0xf7faca08 0xf7faca10 0xf7faca10

0xf7faca20: 0xf7faca18 0xf7faca18 0xf7faca20 0xf7faca20

0xf7faca30: 0xf7faca28 0xf7faca28 0xf7faca30 0xf7faca30

0xf7faca40: 0xf7faca38 0xf7faca38 0xf7faca40 0xf7faca40

0xf7faca50: 0xf7faca48 0xf7faca48 0xf7faca50 0xf7faca50

0xf7faca60: 0xf7faca58 0xf7faca58 {0x0804c858******0x0804e908}

0xf7faca70: 0xf7faca68 0xf7faca68 0xf7faca70 0xf7faca70

0xf7faca80: 0xf7faca78 0xf7faca78 0xf7faca80 0xf7faca80

0xf7faca90: 0xf7faca88 0xf7faca88 0xf7faca90 0xf7faca90

(gdb) x/20wx 0x804c858

0x804c858: 0x00000000 0x000007f1 0x0804d898 0xf7faca60

0x804c868: 0x0804e908 0x0804e908 0x00000000 0x00000000

0x804c878: 0x00000000 0x00000000 0x00000000 0x00000000

0x804c888: 0x00000000 0x00000000 0x00000000 0x00000000

0x804c898: 0x00000000 0x00000000 0x00000000 0x00000000

(gdb) x/20wx 0x804d898

0x804d898: 0x00000000 0x000007f1 0x0804e908 0x0804c858

0x804d8a8: 0x00000000 0x00000000 0x00000000 0x00000000

0x804d8b8: 0x00000000 0x00000000 0x00000000 0x00000000

0x804d8c8: 0x00000000 0x00000000 0x00000000 0x00000000

0x804d8d8: 0x00000000 0x00000000 0x00000000 0x00000000

(gdb) x/20wx 0x804e908

0x804e908: 0x00000000 0x000007c1 0xf7faca60 0x0804d898

0x804e918: 0x0804c858 0x0804c858 0x00000000 0x00000000

0x804e928: 0x00000000 0x00000000 0x00000000 0x00000000

0x804e938: 0x00000000 0x00000000 0x00000000 0x00000000

0x804e948: 0x00000000 0x00000000 0x00000000 0x00000000

*/

Reference

[1]http://angelboy.logdown.com/posts/291983-heap-exploitation

[…] this part of my tutorial, I recommend to read the Summary section in previous one again. I believe you will find more details hidden there. So far, I have finished the tutorial on […]

LikeLiked by 1 person

In your large bins summary:

”

…

Similar to small chunk, the freed large chunk will not be inserted into the small bin after deallocation. Only when a splitted chunk from unsorted bin will be inserted into the large bin.

When retrieving chunks from small bin, the allocator follows the “best fit” rule, i.e. the smallest chunk whose size is larger than the requested size.

…

”

shouldn’t this should be large bin, right?

LikeLike

Yes. You are right. It’s a typo here. It seems that I copy & paste the procedure of small bin, but I forget to correct that. Thanks for your advice.

LikeLike

Hi, Just to clarify under the source code for malloc_init_state, is there an error there?

“`

malloc_init_state (mstate av)

{

int i;

mbinptr bin;

/* Establish circular links for normal bins */

for (i = 1; i fd = bin->bk = bin;

}

“`

I am guessing there could be syntax error.

LikeLike

Perhaps its

“`

malloc_init_state (mstate av)

{

int i;

mbinptr bin;

/* Establish circular links for normal bins */

for (i = 1; i fd = bin->bk = bin;

}

#if MORECORE_CONTIGUOUS

if (av != &main_arena)

#endif

set_noncontiguous (av);

if (av == &main_arena)

set_max_fast (DEFAULT_MXFAST);

atomic_store_relaxed (&av->have_fastchunks, false);

av->top = initial_top (av);

}

“`

LikeLike

Sorry for the spam but I think cant seem to paste it properly too

LikeLike